The digital world runs on content. It works like a global town square where we share ideas, communities form, and businesses thrive. Every day, we use social media to upload posts and respond to others. Have you ever wondered who decides what stays online and what gets removed? This is where content moderation comes in. It is the largely invisible process that protects us from the harmful side of social media.

Social media giants establish content rules to protect users and the platform’s reputation while fostering a positive digital ecosystem. The main goal is to provide a safe, secure, and engaging environment by filtering out harmful, offensive, or inappropriate content, such as hate speech, harassment, and explicit material.

Alongside moderation itself sits the equally important content moderation policy, which sets out the rules governing what users can and cannot share on social media platforms. These policies don’t just protect the business legally; they shape digital culture, protect brand reputation, and influence how users interact on social platforms.

In this article, I’ll break down the definition of content moderation, its policies, and their impact on brand and user safety, marketing, and trust. I’ll also discuss the future and best practices of content moderation. If you are curious about how online life is managed, this article is a complete guide to why content moderation matters in 2025.

What Is Content Moderation? A Beginner-Friendly Guide

When you scroll through Facebook, watch a video on TikTok, or write a review on Amazon, you experience content moderation without realising it.

Social platforms such as Facebook, YouTube, and TikTok, as well as forum communities, use content moderation to review what people post and filter out harmful content, making online life safer and improving the user experience.

In 2025, filtering content is crucial because billions of people use social media and upload many kinds of content. Moderation helps protect users and their data, maintain trust, and keep businesses compliant with online safety regulations in the US, UK, and beyond.

Content Moderation Definition in Simple Terms

Content moderation is the process by which social platforms review and manage user-generated content to keep it safe, respectful, and compliant with relevant laws and regulations. It acts as a filter for harmful content, such as hate speech, adult content, misinformation, spam, and child abuse material.

Moderation follows the rules each platform sets. YouTube’s content moderation policy, for example, prohibits uploading videos that are violent, dangerous, sensitive, or vulgar, or that mislead viewers.

If someone uploads content that breaks these guidelines, it may be removed, and in some cases their channel may be banned.

Why Does Online Content Need Content Moderation?

According to Backlinko’s statistics, 5.41 billion people used social media as of July 2025. The online space continues to grow daily, with billions of users uploading content ranging from TikTok videos to Amazon reviews. Without proper content moderation, social platforms risk becoming unsafe, unreliable, and legally exposed.

Why Do Online Platforms Need Content Moderation?

The key factors that make platform moderation necessary today:

User trust and safety – protecting users from harmful material.

Brand reputation – companies cannot afford to be associated with unacceptable or deceptive content.

Legal compliance – online safety regulations in the UK, USA, and the EU raise the stakes and make clear moderation rules a necessity.

AI-driven threats – deepfakes, fake reviews, and AI-generated misinformation must be closely monitored and addressed.

Moderation in Social Media, E-commerce, and Communities

Social Media: Instagram restricts images of violence; TikTok removes problematic challenges.

E-commerce: Platforms like Amazon and eBay screen reviews to filter out fake or untrue claims about products.

Online Communities: Reddit relies on human moderators and AI to keep discussions civil and in line with community rules.

Moderation is not only for large companies. Small firms that rely on blogs, forums, or customer communities may also need a moderation policy to protect their audience and brand.

Types of Content Moderation and How They Work

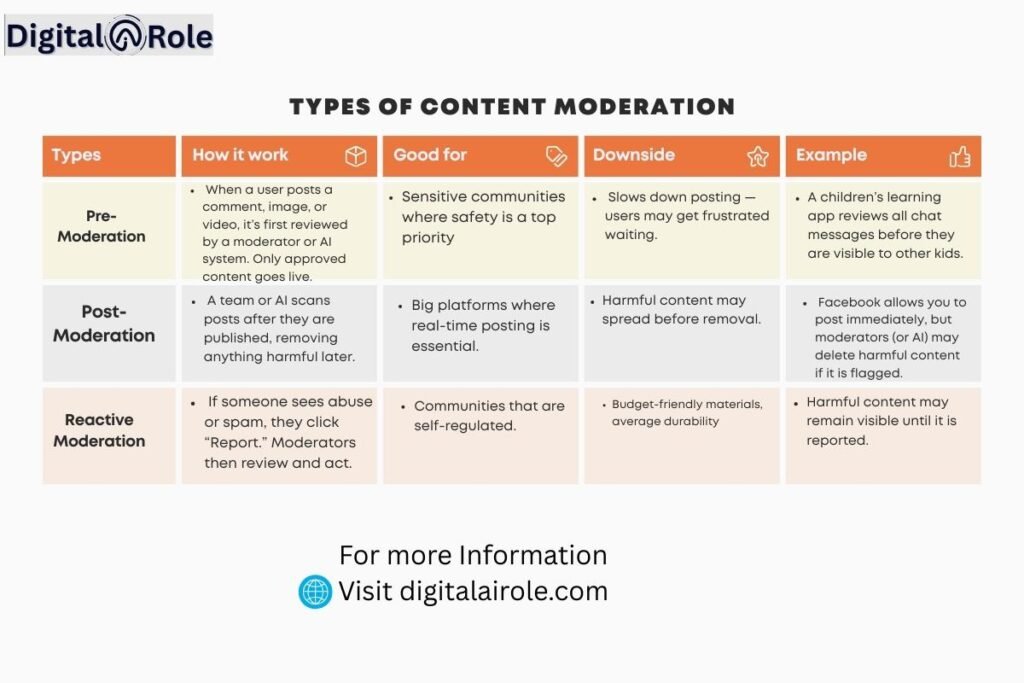

Content moderation doesn’t happen in just one way. Platforms use different types of moderation, tailored to their content, audience size, and risk level. These can be divided into timing-based methods (pre-moderation, post-moderation, and reactive moderation) and methods defined by who does the reviewing (AI, human, or hybrid moderation). Understanding these types helps a business choose the right strategy.

Pre-moderation Content

Content is reviewed and checked before it is published. This prevents users from seeing harmful content, but it can slow down user interaction.

Example: Children’s apps such as YouTube Kids use pre-moderation filters, prioritising safety over timeliness.

Post-moderation Content

Content is posted immediately and reviewed afterwards for accuracy and quality. This enables quick communication, but it carries the risk that users see potentially dangerous content for a short period.

Example: On Facebook, post-moderation of live streams is common, with moderators reviewing material shortly after it is uploaded to the platform.

Reactive moderation

Material remains online until a user reports it, at which point moderators intervene. It is a relatively inexpensive technique, but it requires significant community involvement.

Example: Reddit relies heavily on reactive moderation, where users report posts that break the rules and the reports are brought to the attention of moderators.

All three methods have trade-offs, and leading platforms strive to strike a balance between speed and safety.
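To make the difference between the three timing-based methods concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not how any real platform is implemented: the `Mode`, `Post`, and `Platform` names are hypothetical, and the `is_acceptable` callback stands in for whatever human or automated decision a platform actually uses.

```python
from dataclasses import dataclass, field
from enum import Enum


class Mode(Enum):
    PRE = "pre"            # review before publishing
    POST = "post"          # publish first, review soon after
    REACTIVE = "reactive"  # publish, review only if a user reports it


@dataclass(eq=False)  # compare posts by identity, not by field values
class Post:
    text: str
    visible: bool = False
    reports: int = 0


@dataclass
class Platform:
    mode: Mode
    review_queue: list = field(default_factory=list)

    def submit(self, post: Post) -> None:
        if self.mode is Mode.PRE:
            # Stays hidden until a review approves it.
            self.review_queue.append(post)
        elif self.mode is Mode.POST:
            # Visible immediately, but always queued for review.
            post.visible = True
            self.review_queue.append(post)
        else:
            # Reactive: visible, and nobody reviews it unless it is reported.
            post.visible = True

    def report(self, post: Post) -> None:
        # A user report puts the post in front of moderators (once).
        post.reports += 1
        if post not in self.review_queue:
            self.review_queue.append(post)

    def review(self, is_acceptable) -> None:
        # is_acceptable is a hypothetical stand-in for a moderator's decision.
        while self.review_queue:
            post = self.review_queue.pop(0)
            post.visible = is_acceptable(post)
```

The trade-off described above shows up directly in `submit`: pre-moderation delays visibility, post-moderation allows a short window of exposure, and reactive moderation depends entirely on someone calling `report`.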

AI Moderation vs Human Moderation: Strengths and Weaknesses

AI moderation

AI moderation uses algorithms to automatically detect spam, nudity, hate speech, or misinformation. It’s fast, scalable, and works 24/7.

Advantages: Fast, cost-effective, and able to handle billions of posts.

Disadvantages: May misread context (e.g., flagging satire as hate speech).

Example: On Instagram, AI helps block explicit images before they are posted.

Human moderation

Human moderation involves people reviewing flagged content. Humans are better at understanding cultural context, sarcasm, and sensitive issues.

Advantages: Expertise in complicated matters and decisions made with empathy.

Disadvantages: Emotionally draining for moderators, expensive, and slow.

Example: TikTok employs thousands of human moderators worldwide to review videos that AI has flagged as suspicious.

Hybrid models

Why platforms combine automation with human judgment

No system is perfect. That is why most platforms nowadays opt for hybrid moderation, using AI to handle the bulk of the work and human reviewers to address edge cases.

Case in point: YouTube uses AI to automatically flag harmful videos, whereas a human makes the final decision on an appeal. This aims to deliver both speed and fairness.

Hybrid moderation can also benefit small businesses.

AI can block spam and other unwanted messages that are easy to detect, while business owners or community administrators review user feedback to make the more nuanced calls.

The hybrid model combines the best of both worlds: efficient automation, plus human intervention to improve accuracy and fairness.
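The hybrid approach can be sketched as a simple routing rule: an automated score decides the clear cases, and anything uncertain goes to a person. The Python below is only an illustration. The thresholds, the `route` function, and the keyword-based `toy_classifier` are all assumptions made for this example; a real system would use a trained model and thresholds tuned per policy area.

```python
from dataclasses import dataclass

# Hypothetical thresholds chosen for illustration only.
REMOVE_THRESHOLD = 0.90   # at or above this, remove automatically
REVIEW_THRESHOLD = 0.50   # at or above this, send to a human reviewer


@dataclass
class Decision:
    action: str   # "remove", "human_review", or "publish"
    score: float


def toy_classifier(text: str) -> float:
    """Stand-in for an AI model: returns a made-up harm score in [0, 1]."""
    risky_terms = {"scam": 0.6, "free money": 0.95}
    lowered = text.lower()
    return max(
        (score for term, score in risky_terms.items() if term in lowered),
        default=0.0,
    )


def route(text: str, classifier=toy_classifier) -> Decision:
    score = classifier(text)
    if score >= REMOVE_THRESHOLD:
        # Clear-cut case: automation handles it alone.
        return Decision("remove", score)
    if score >= REVIEW_THRESHOLD:
        # Edge case: a human makes the nuanced call.
        return Decision("human_review", score)
    return Decision("publish", score)
```

For example, `route("Is this a scam?")` lands in the human review band, while an ordinary comment is published without anyone needing to look at it. Widening or narrowing the gap between the two thresholds is how a site would trade reviewer workload against the risk of automated mistakes.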

Purpose of Content Moderation in the Digital Era

Today, online platforms are the go-to places for shopping and chatting, and they are evolving into digital communities where people connect, share, and build trust. Keeping these spaces safe, secure, and reliable requires content moderation. Its purpose goes beyond deleting offensive material: it helps protect users, strengthen businesses, and create a positive user experience.

1. Shielding users from malicious or unlawful material

The internet can bring out the worst in people at times: hate speech, bullying, scams, and even crime. Content moderation serves as a filter, helping to block harmful content before it spreads.

Example: In 2024, TikTok tightened its moderation to remove harmful so-called challenges that promoted unsafe behaviour among adolescents. This proactive approach not only reduced risk but also showed parents and educators that the platform takes user safety seriously.

For consumers, this translates into safer internet use, with less fear of being targeted, harassed, or deceived.

2. Establishing trust and business image

Trust underpins every business, whether you operate in a large market spanning continents or run something small online. Customers are likely to be put off by a site that is full of abusive comments, fake reviews, or misleading information.

Case study

Amazon has invested heavily in AI and human moderators to combat fake reviews. In 2023 alone, it blocked more than 200 million suspicious reviews, helping shoppers make better decisions. This not only keeps customers safe but also enhances Amazon’s reputation as a reliable trading platform.

With a clear content moderation policy, a company demonstrates its commitment to safety and quality, a key driver of brand loyalty.

3. Impact on customer interaction and retention

Fair, consistent moderation makes users feel heard, respected, and secure. Once customers trust that they can communicate without fear of abuse or fraud, they engage for longer and become more loyal.

Reddit is an example: it uses both AI and volunteer moderators to keep discussions respectful. Well-moderated subreddits (such as r/science) show high-quality discussion and user trust, whereas poorly moderated forums often fail because of toxic users.

This shows that content moderation directly influences the user experience, which in turn drives customer loyalty and long-term business success.

Content Moderation Policy: What It Is and Why It Matters

Content moderation is not only about removing harmful content; it also requires an explicit, well-written set of rules and guidelines that determine what can and cannot be posted on a particular platform. This set of rules is called a content moderation policy, and it forms the basis of a safe and dependable online environment.

Adopting an effective policy on content moderation

A content moderation policy is a rulebook that tells users what behaviour, language, and content a particular platform permits. It also guides moderators and AI tools on how to respond when rules are broken.

Put simply, it answers three overarching questions:

What is allowed? (e.g., constructive comments, product reviews, discussion)

What is prohibited? (e.g., hate speech, extreme violence, scams, false health claims)

How do we deal with someone who breaks the rules? (warnings, removal, suspension, or banning)

To illustrate, a small e-commerce site might prohibit fake product reviews, whereas Facebook, operating globally, prohibits hate speech and terrorist propaganda. Both are moderation policies; they differ only in scale.
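The three questions a policy answers can be written down as a small rule table. The sketch below is a hypothetical example for a small site, not any platform’s actual policy: the category names, the `POLICY` table, the `LADDER` of sanctions, and the `enforce` function are all assumptions made for illustration.

```python
# Hypothetical policy for a small site; categories and penalties are examples.
POLICY = {
    # Question 1: what is allowed?
    "allowed": {"constructive_comment", "product_review", "discussion"},
    # Question 2: what is prohibited, and what is the first response?
    "prohibited": {
        "spam": "removal",
        "fake_review": "removal",
        "hate_speech": "suspension",
    },
}

# Question 3: how do responses escalate for repeat violations?
LADDER = ["warning", "removal", "suspension", "ban"]


def enforce(category: str, prior_violations: int = 0) -> str:
    """Return the action for a piece of content in the given category."""
    if category in POLICY["allowed"]:
        return "allow"
    base = POLICY["prohibited"].get(category)
    if base is None:
        # The policy is silent on this category, so a person decides.
        return "human_review"
    # Each prior violation moves one step up the ladder, capped at a ban.
    step = min(LADDER.index(base) + prior_violations, len(LADDER) - 1)
    return LADDER[step]
```

Here a first spam post is simply removed, while `enforce("spam", prior_violations=1)` escalates to a suspension. Writing the policy as data rather than scattered conditions also makes it easier to publish the same rules to users that moderators and tools apply.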

Small business tip: Even if you only have a blog with a comment section, it’s essential to have at least a basic moderation policy in place to prevent spam, abusive comments, and damage to your reputation.

Compliance with Laws and Regulations (UK and USA Focus)

By 2025, having a moderation policy is no longer optional; in many cases it is a legal requirement. The UK, the USA, and governments in Europe have developed stricter internet safety rules, particularly for websites whose primary content is user-generated.

UK: The Online Safety Act (2023) requires platforms to remove illegal content quickly and to provide clear reporting mechanisms. Failure to comply may result in substantial fines from Ofcom, the UK’s online safety regulator.

USA: Platforms in the U.S. remain protected by Section 230 of the Communications Decency Act; yet, as political pressure on the law mounts, businesses are increasingly expected to show that they are taking active measures to remove harmful content.

In addition, the Children’s Online Privacy Protection Act (COPPA) imposes extra obligations on businesses whose audiences include children under 13.

For businesses, aligning policies with these regulations is not only about avoiding penalties but also about preserving trust. Users in the UK and the USA are more likely to use services that apply their policies objectively.

Case Study: Content Moderation Policy Models on the Major Platforms

Facebook (Meta)

Facebook has one of the most elaborate moderation systems, known as its Community Standards. These cover violence, hate speech, nudity, and misinformation. Its transparency reports publish the number of posts flagged and removed, which creates accountability.

TikTok

TikTok’s Community Guidelines place a strong emphasis on user safety, particularly for young users. They prohibit threats and misinformation, and promote mental well-being by encouraging safe practices (e.g., warnings against misinformation).

Reddit

Reddit keeps its site-wide policy relatively minimal, aiming to prevent harassment, unlawful material, and spam. In addition, individual subreddits publish their own rules of conduct, which volunteer moderators enforce. This combination of centralised and community-driven policy sets Reddit apart.

Amazon & E-commerce

Amazon’s moderation team deals with fake reviews, counterfeits, and fraud. It combines AI with human reviewers to flag potential manipulation. This policy is about protecting consumer trust in transactions rather than regulating speech.

The Censorship of the Internet and the Direction of Online Life

Content moderation must always strike a balance between freedom of speech and user safety. Too much control looks like censorship; too little allows hate speech, misinformation, and abuse to proliferate. Platforms such as Facebook and Reddit are scrutinised in the UK and the USA over how they protect expression while keeping communities safe.

The Implications for Marketing, SEO, and Brand Communication

For companies, moderation fosters goodwill and a strong presence. Brands do not want to appear next to harmful material. Search engines also tend to favour sites with safe, high-quality content, which can improve their rankings. Transparent rules safeguard reputations and enhance brand communication.

Psychological Effect on Moderators and Users

Moderators can suffer stress from viewing abusive material, while users can feel censored when moderation goes too far. The key is a balance that preserves a sense of security, confidence, and involvement, which defines the culture of sharing, selling, and relating online.

Conclusion

In this article, I explained what content moderation is and how it shapes online life. In simple terms, content moderation filters out harmful content that does not comply with a platform’s guidelines. This helps users and platforms build trust in each other. E-commerce and social platforms use content moderation to keep their services trustworthy and compliant with legal requirements. They do so through different types of moderation, including pre-moderation, post-moderation, and reactive moderation, and many combine human and AI moderation to make the process smoother.

A content moderation policy matters because it determines what stays online and what is removed. Governments in many countries, including the US and the UK, set their own rules on what content may be shared, and every social platform defines its own guidelines for users to follow.